Keep Your Data Local: Using Ollama and AnythingLLM for Private AI Interactions

Ever feel hesitant to upload your documents to the cloud, like a research paper you need summarized or a contract from which you need to extract key points? In today's data-driven world, privacy concerns are paramount. This guide explores the powerful combination of Ollama and AnythingLLM, allowing you to leverage AI for private interactions with your documents while keeping your data firmly within your own control. Dive in and discover the power of private AI document analysis!

What Is Ollama?

Ollama is a groundbreaking open-source platform that empowers users to run large language models (LLMs) like Llama 2 and Llama 3 directly on their local devices. Unlike cloud-based AI solutions, Ollama ensures that sensitive data never leaves the user's control, mitigating the risks associated with unauthorized access or data breaches.

At the core of Ollama's design is a commitment to privacy and security. By enabling local deployment of LLMs, the platform eliminates the need to rely on external servers, providing users with complete control over their data. This approach is further reinforced by Ollama's support for granular permission settings, allowing multiple users to collaborate securely while maintaining individual control over confidential information.

What is AnythingLLM?

Complementing Ollama's capabilities, AnythingLLM is an open-source all-in-one AI desktop and Docker application. AnythingLLM seamlessly integrates with Ollama, providing users with a comprehensive and user-friendly interface to interact with their locally-hosted LLMs.

One of the standout features of AnythingLLM is its extensive customization capabilities. Users can tailor the application's appearance to their preferences, ensuring a seamless and intuitive user experience. Additionally, the application offers a comprehensive developer API, empowering users to integrate it with their existing workflows and tools.

AnythingLLM's versatility extends beyond just the user interface. The application supports a diverse array of document types, including PDFs, Word documents, and other business-related formats, allowing users to leverage their entire knowledge base for AI-driven insights and automation.

Combining Ollama and AnythingLLM for Private AI Interactions

The true power of Ollama and AnythingLLM lies in their seamless integration, which unlocks a world of possibilities for private and personalized AI experiences. By following these steps, you can run LLMs locally using Ollama and interact with them through the intuitive AnythingLLM interface to enjoy the benefits of advanced AI capabilities without compromising your data privacy.

Step 1: Install Ollama

1. Visit the Ollama website and download the appropriate version for your operating system (macOS, Linux, or Windows)

2. Run the installer and follow the on-screen instructions to complete the seamless installation process.

Step 2: Start the Ollama Server

1. Open a terminal or command prompt.

2. Navigate to the directory where you installed Ollama.

3. Run the following command to start the Ollama server:

4. The Ollama server will start running on `localhost:11434`.

Step 3: Install AnythingLLM

1. Visit the AnythingLLM website and download the desktop version for your operating system (macOS, Windows, or Linux).

2. Run the installer and follow the on-screen instructions to complete the easy installation process.

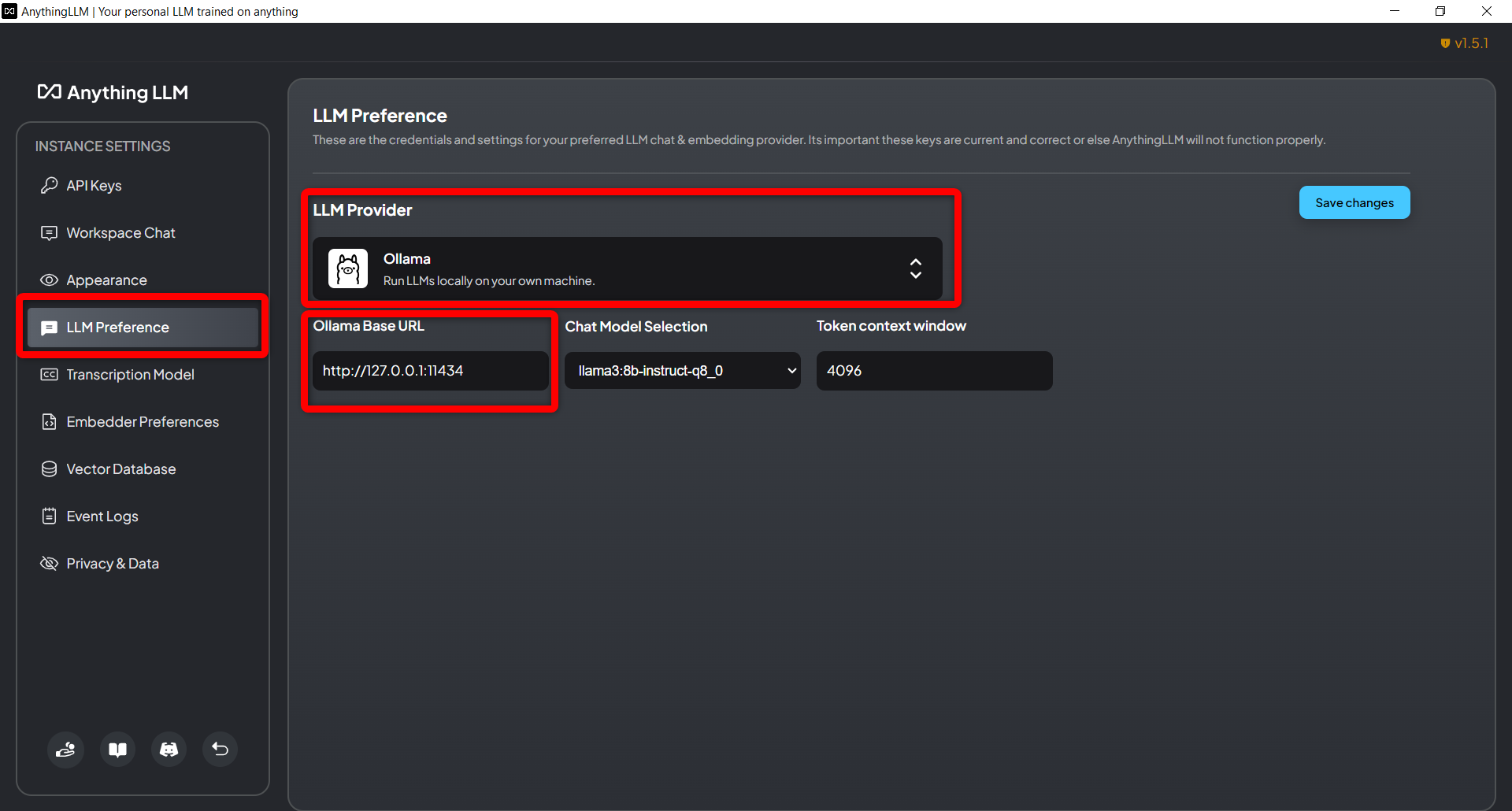

Step 4: Configure AnythingLLM to Use Ollama

1. Open AnythingLLM on your desktop.

2. Navigate to the settings and select "LLM Preference".

3. Choose "Ollama" as your LLM Provider.

4. Enter the base URL of the running Ollama server: `http://127.0.0.1:11434`.

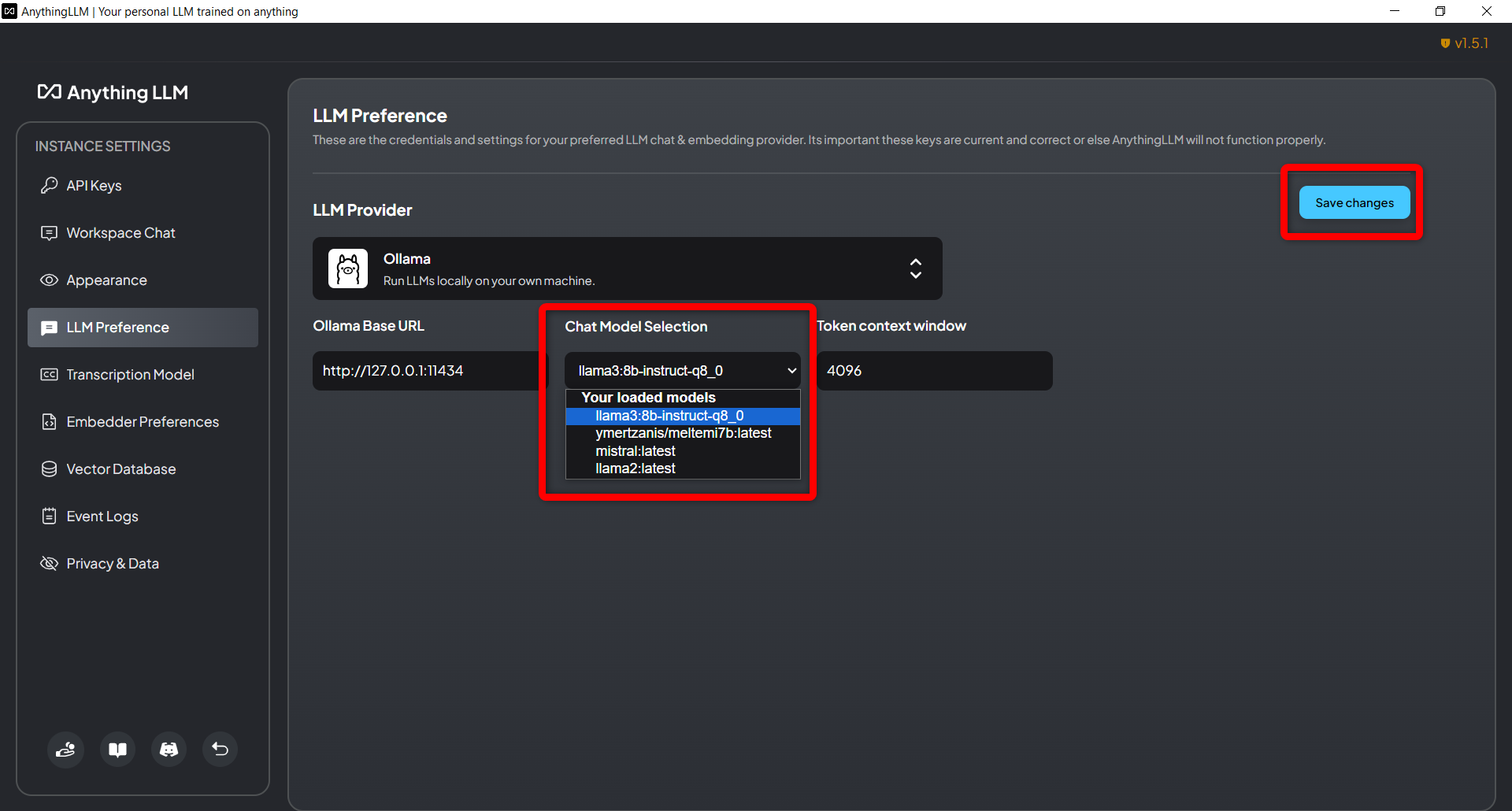

Step 5: Select and Configure LLMs in Ollama

1. In the "Chat Model Selection", you should see the available models listed.

2. Choose the desired LLM to use with AnythingLLM.

3. Click "Save changes" to apply the changes.

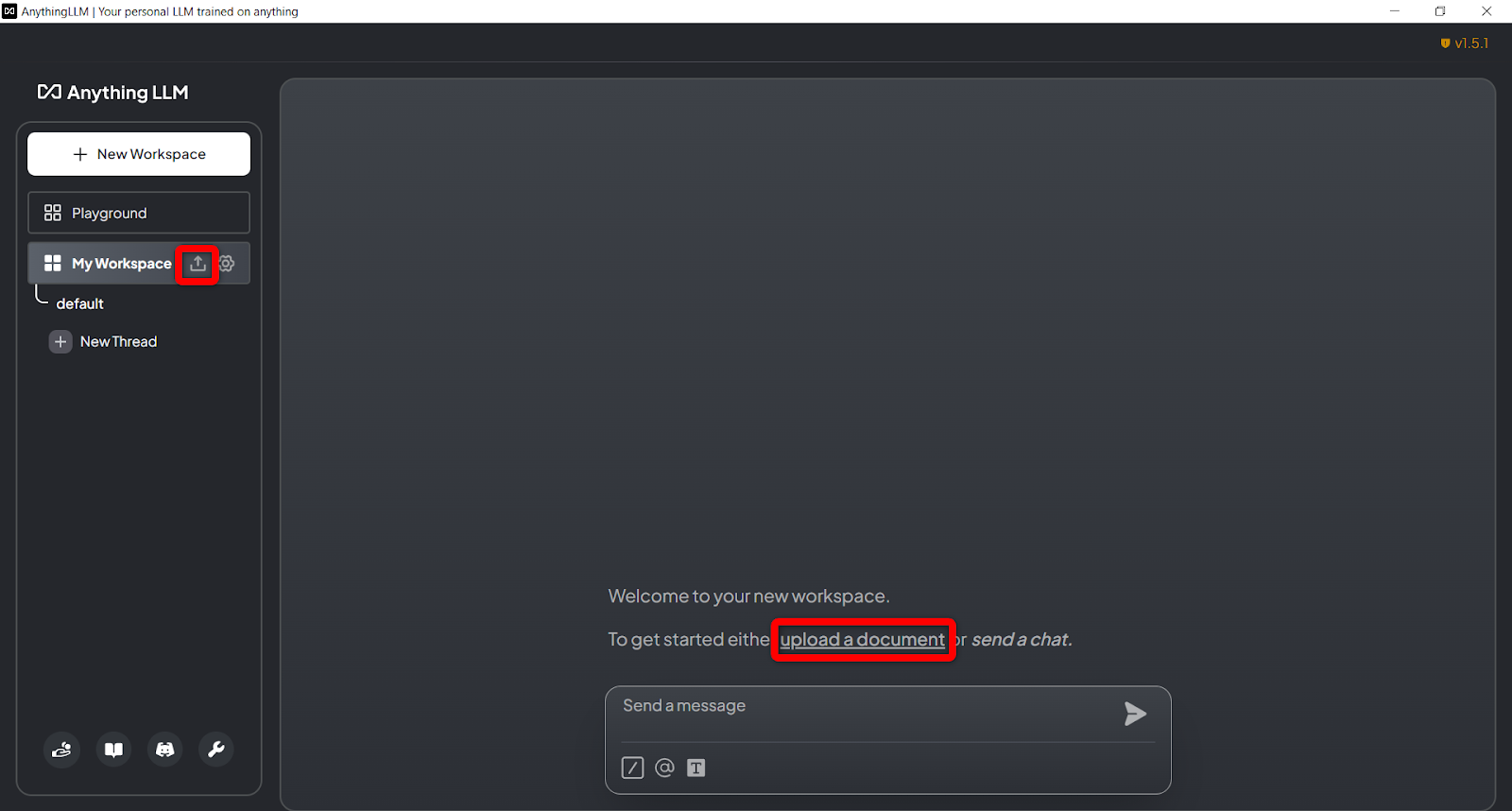

Step 6: Upload Your Documents to AnythingLLM

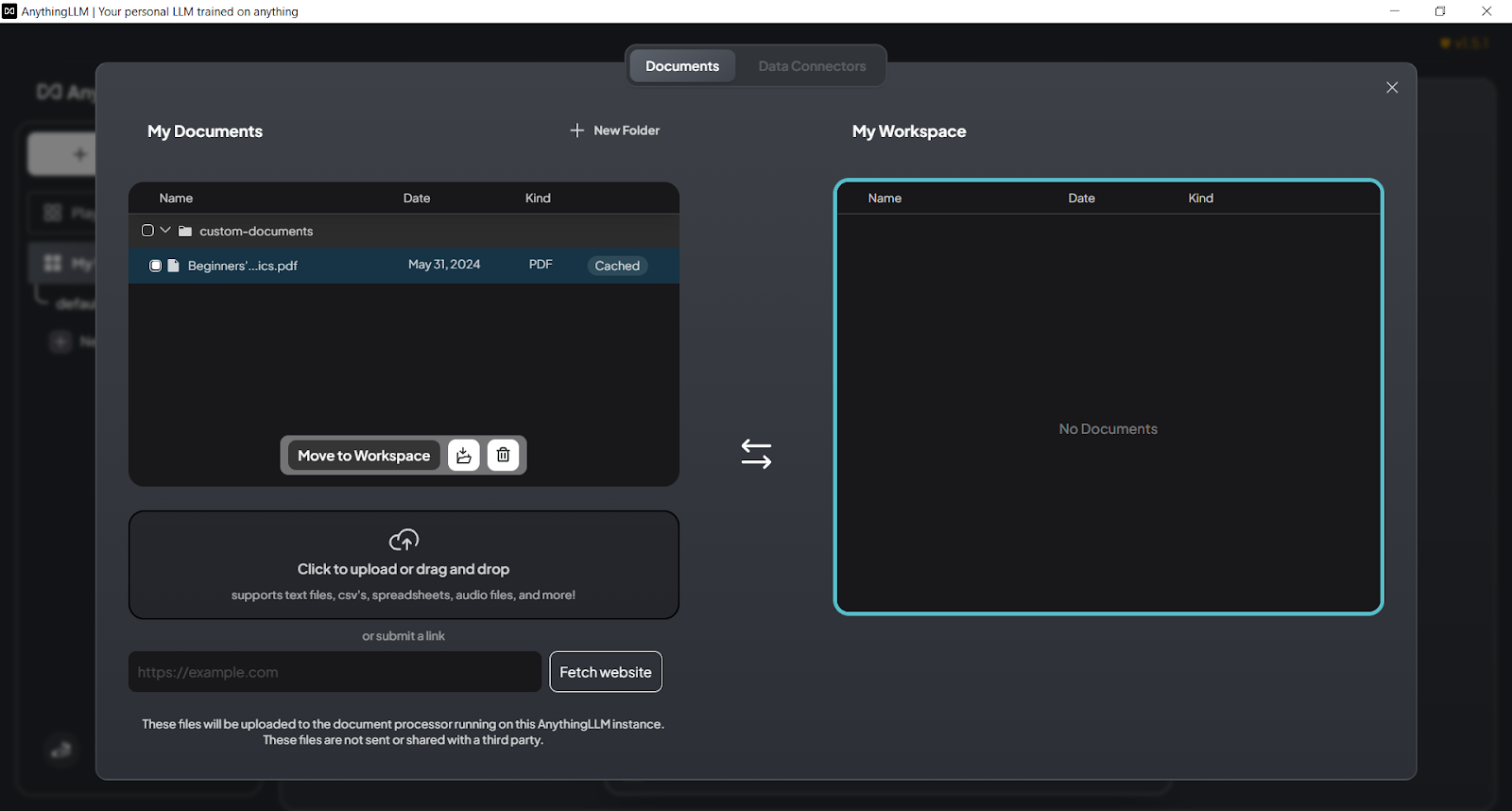

1. In the AnythingLLM interface, navigate to your Workspaces section.

2. Select the Workspace where you want to upload your documents.

3. Click on the "upload a document" option on the chat window or click the upload symbol next to your Workspace name.

4. Drag and drop your documents (e.g., PDFs, Word files, text files) into the upload area or click to upload files from your local file system.

5. From the documents that you uploaded, select the ones you want, and click “Move to Workspace”

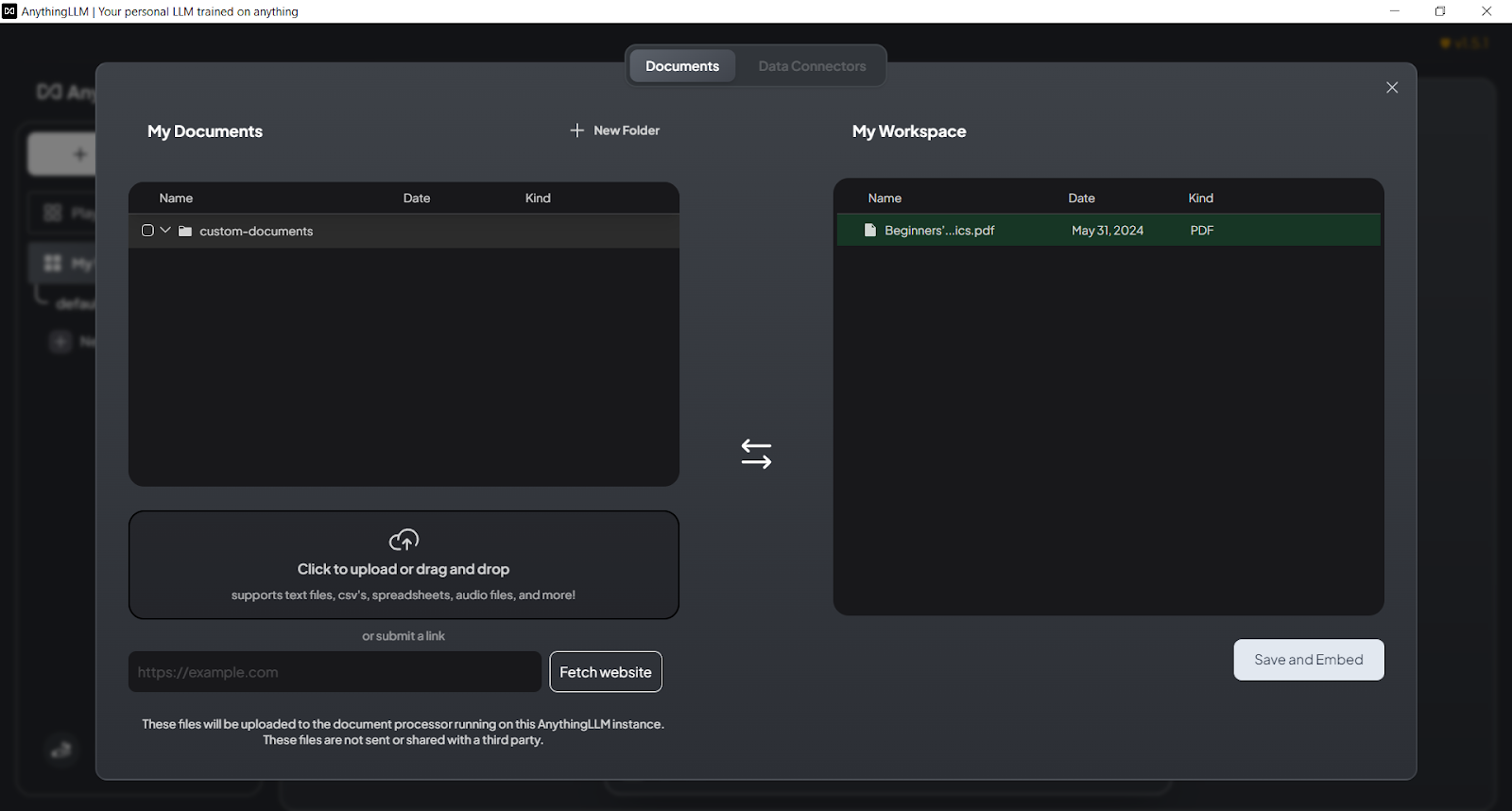

6. Wait for the documents to be processed and indexed by AnythingLLM, then click on “Save and Embed.”

7. Once this is complete, you can start chatting with your documents using the AI capabilities provided by the locally-hosted LLMs from Ollama.

Now that you have integrated Ollama and AnythingLLM, you can start interacting with your locally-deployed language models through the AnythingLLM interface. The platforms offer a suite of tools for shaping the behavior and outputs of the LLMs, ensuring a highly personalized AI experience while keeping your data secure on your local machine.

Key Advantages of the Ollama and AnythingLLM Combination

The combination of Ollama and AnythingLLM offers several key advantages that make them an ideal choice for private document interaction:

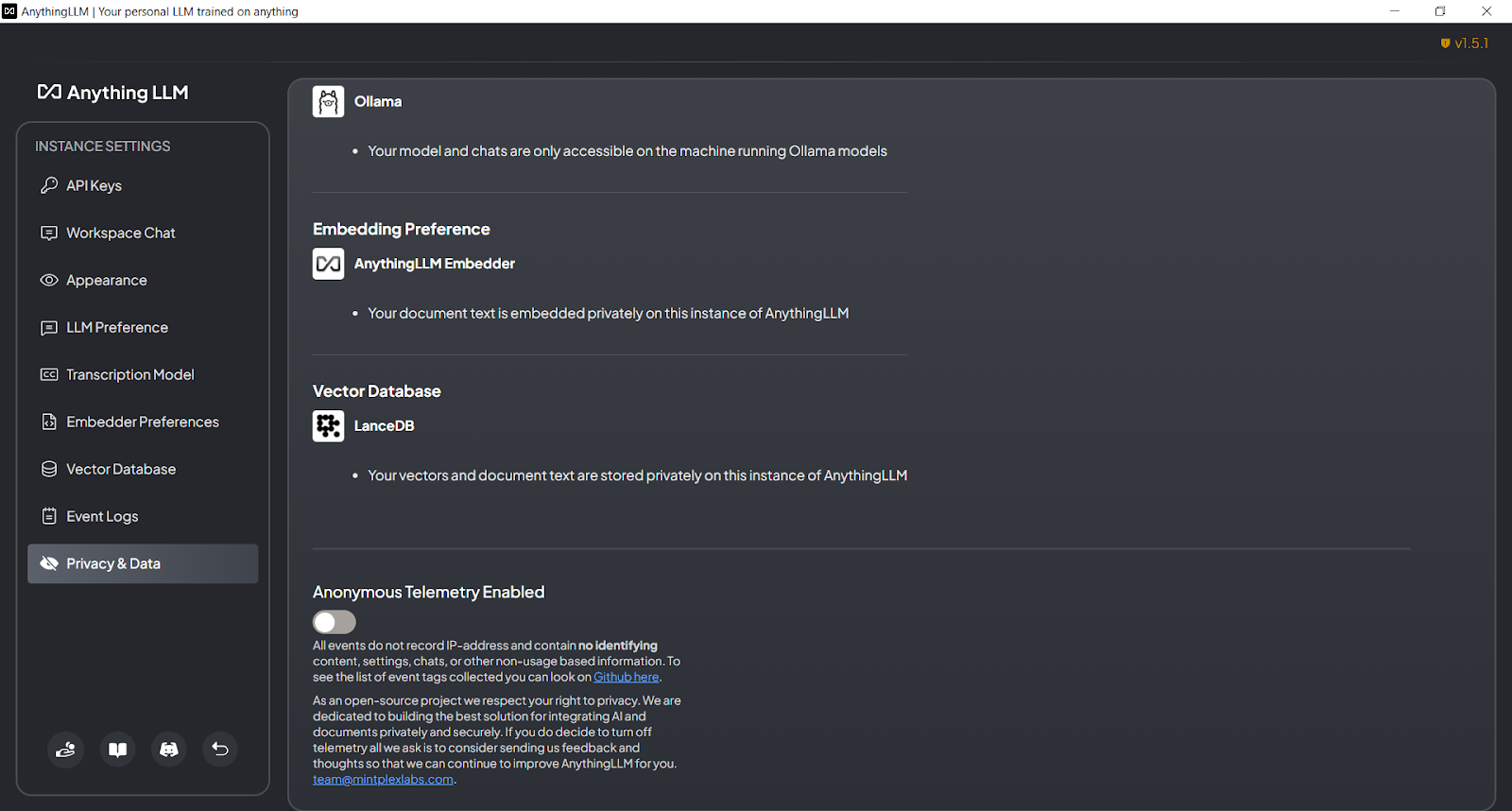

Data Privacy and Security

The primary benefit of using Ollama and AnythingLLM is data privacy and security. By running LLMs locally on the user's device, Ollama ensures that sensitive information never leaves the user's control, mitigating the risks associated with cloud-based solutions. AnythingLLM further enhances this privacy-focused approach by only communicating with explicitly connected services, avoiding any unintended cloud-based interactions. This combination of local execution and secure communication provides users with the peace of mind that their data is protected from unauthorized access or data breaches.

Customization and Flexibility

Ollama and AnythingLLM offer unparalleled customization and flexibility, empowering users to tailor their AI experiences to their unique needs. The ability to choose from a variety of LLM providers, including proprietary models like GPT-4, custom models, and open-source options like Llama and Mistral, allows users to select the most suitable language model for their specific use cases.

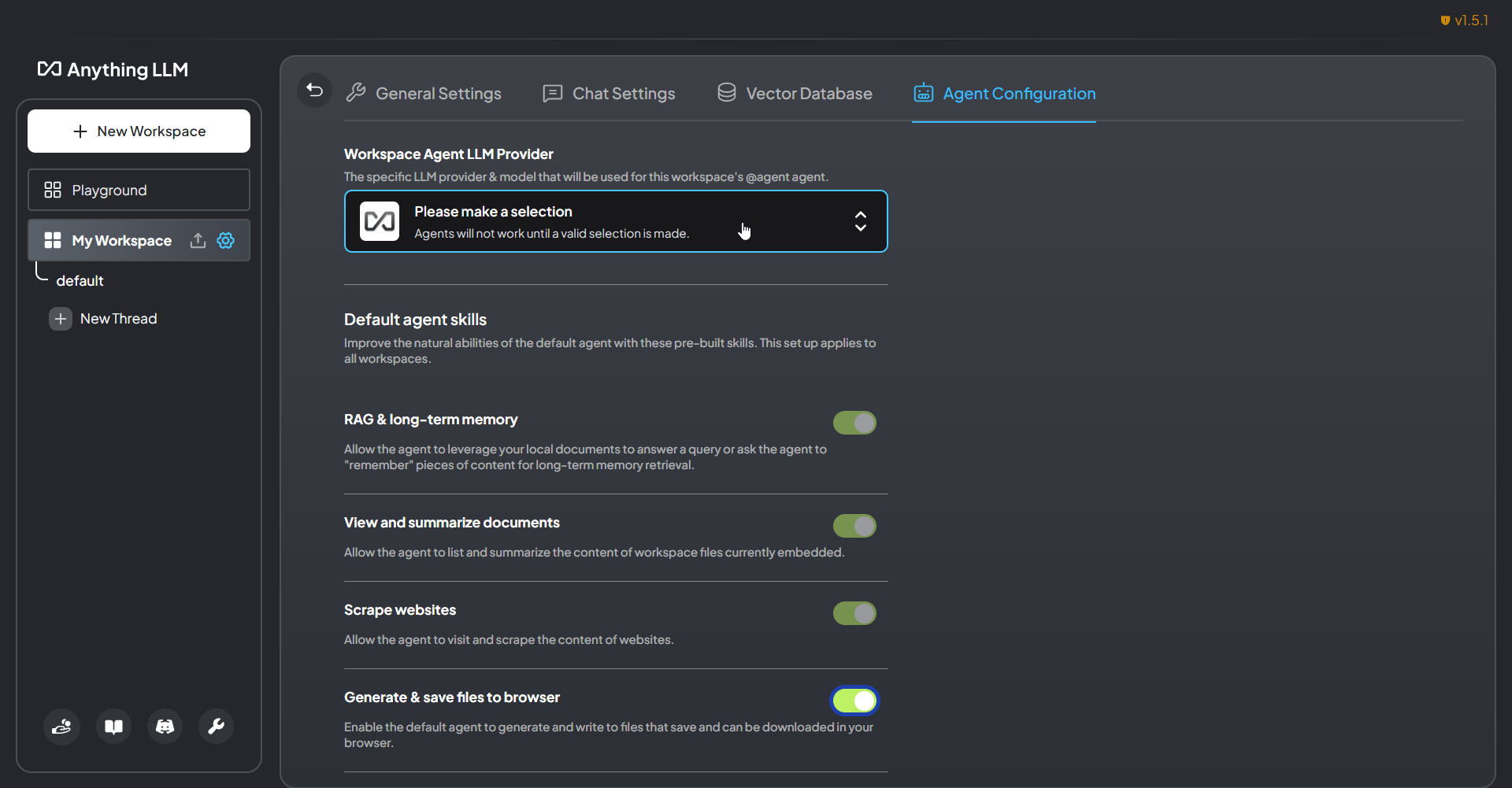

Furthermore, the advanced customization capabilities of AnythingLLM enable users to fine-tune the language models through prompt engineering, few-shot learning, and fine-tuning processes. This level of control ensures that the AI agents created within the platform are highly specialized and aligned with the user's requirements.

Enhanced Capabilities

The integration of Ollama and AnythingLLM unlocks a range of advanced capabilities that empower users to interact with their data in innovative ways. The platforms' support for Retrieval-Augmented Generation (RAG) allows users to engage with a diverse range of document types and media, seamlessly blending information retrieval and language generation.

AnythingLLM further extends these capabilities by offering features like web scraping, document summarization, and live web searches, all powered by the locally-hosted LLMs from Ollama. By leveraging the unique features of Ollama and AnythingLLM, users can enjoy the benefits of advanced AI capabilities while maintaining full control over their data.

Potential Concerns and Limitations

While the combination of Ollama and AnythingLLM offers a compelling solution for private AI interactions, it's important to consider some potential concerns and limitations.

- Hardware Requirements: Running LLMs locally can be resource-intensive, requiring powerful hardware such as high-performance CPUs or GPUs. Users with less capable devices may experience performance issues or limitations in the size and complexity of the models they can effectively run. This hardware requirement could present a barrier for some users, particularly those with older or lower-end computers.

- Model Availability and Compatibility: The availability and compatibility of LLM models within the Ollama and AnythingLLM ecosystem is another consideration. While both platforms support a variety of models, including proprietary, custom, and open-source options, the range of models may be more limited compared to cloud-based AI services.

- Ongoing Maintenance and Updates: As the AI landscape continues to evolve, users will need to stay up-to-date with the latest versions and capabilities of Ollama and AnythingLLM. This may require regular software updates, model downloads, and potentially the need to migrate to newer versions of the platforms. Keeping the system current and ensuring seamless integration between the components could become an ongoing challenge.

- Potential Performance Trade-offs: By running LLMs locally, users may experience some performance trade-offs compared to cloud-based AI services. Factors such as model size, hardware capabilities, and network latency could impact the overall responsiveness and processing speed of the system. Users may need to carefully balance their privacy and security requirements with the desired level of performance.

Closing Remarks

By leveraging the synergy between Ollama and AnythingLLM, you can enjoy the power of AI while keeping your data firmly under your control. This local-first approach not only enhances data privacy and security but also offers extensive customization and flexibility.

While there are considerations such as hardware requirements and ongoing maintenance, the benefits of enhanced security, customization, and advanced capabilities make this an appealing choice for many users, paving the way for a future where privacy and personalization are central to the AI revolution.

Similar posts

Start your 30-day free trial