A comprehensive guide for fine-tuning a GPT-3 model

Note: In recent years, OpenAI has shifted its product emphasis toward chat-completion models such as the GPT-3.5 and GPT-4 series, which are optimized for conversational multi-message interfaces rather than the “prompt → completion” style of earlier GPT-3 models. As a result, this guide is explicitly scoped for the older GPT-3 family (e.g. davinci, curie, babbage, ada) and does not apply directly to GPT-3.5, GPT-4, GPT-4o, GPT-5, or other newer models.

Introduction

As businesses increasingly rely on data-driven insights to inform decision-making, the demand for powerful natural language processing (NLP) models has never been higher. OpenAI's Generative Pre-trained Transformer 3 (GPT-3) is one of the most advanced and widely used NLP models on the market today. While GPT-3 models are accurate and versatile out of the box, fine-tuning can further enhance their capabilities.

In this guide, we will explore the benefits of fine-tuning a GPT-3 model for analytics insights, the process of preparing for and implementing fine-tuning, and best practices for optimizing your fine-tuned models. If you are new to fine-tuning, this post will provide you with the information you need to get started with fine-tuning a GPT-3 model and unlock its full potential.

Benefits of Fine-Tuning a GPT-3 model for Analytics

A GPT-3 model can generate human-like text, answer questions, complete sentences, and much more. However, fine-tuning a GPT-3 model can provide even more benefits for analytics, including:

A. Enhanced accuracy and relevance of results

Fine-tuning the model on your own data can enhance its accuracy and relevance to your specific needs. Training on your own examples teaches it the language and terminology specific to your industry or domain, and improves its ability to provide insights that are relevant to your business.

B. Customization of model for specific tasks

By fine-tuning the model for a specific task, you can significantly improve its performance and reduce the time and effort needed to process and analyze large volumes of data compared to a generalized model. For example, when analyzing a spike in your digital analytics trends, a generalized model would likely suggest generic root causes. The analyst then has to research each one manually, and in many cases the suggestions distract from the actual root cause. In contrast, a fine-tuned model draws on past resolved cases, so its suggestions are more accurate and require less manual follow-up.

C. Real-world use of fine-tuned GPT-3 models in analytics

In the analytics industry, it's crucial to remember that the numbers we see can have many different meanings depending on the specific operations of the business. While it's helpful to be aware of industry benchmarks and trends, it's equally important to recognize that generalized insights may not apply to your particular business or use case. This is where fine-tuning your GPT-3 model can be particularly useful, as it allows you to incorporate your own business knowledge and experience into the analytics process. By training the model on your own data and using it to generate insights tailored to your specific needs, you can gain a more accurate and nuanced understanding of your business operations and make more informed data-driven decisions.

Preparing for Fine-Tuning a GPT-3 model

Before you begin fine-tuning a GPT-3 model, it's important to take a few key steps to prepare your data and ensure that you have the necessary resources and tools to complete the process. Here are the steps to follow:

A. Define your objectives and tasks

Before you begin fine-tuning, it's important to define your objectives and tasks. This means deciding what you want to accomplish with your fine-tuned model and how you plan to measure its success. For example, you might want it to recommend follow-up actions on anomalies found in your digital analytics data for various channels and metrics based on previous experiences.

B. Set up your working environment

To interact with OpenAI’s API and prepare your data, you’ll need to install the required libraries in your working environment. You can do this by running the command “pip install --upgrade openai” for the OpenAI package. Typically, you’ll also need to install a data preparation library like pandas.

C. OpenAI account and API key

You'll also need to create an account with OpenAI and generate an API key. This allows you to prepare the jsonl file required as input for the fine-tuning process and start a fine-tuning job. You can easily generate an API key by logging in to your account, clicking on your avatar, going to “View API keys”, and clicking on “Create new secret key”. Be aware that you will also need to add credits to your account since fine-tuning is not free.
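One common way to keep the secret key out of scripts and notebooks is to store it in an environment variable. The sketch below assumes the key has been exported as OPENAI_API_KEY, the variable name the openai package looks for by default; load_api_key is a hypothetical helper for illustration, not part of the library.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in an environment variable.

    Failing early with a clear message is easier to debug than an
    authentication error in the middle of a fine-tuning script.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your OpenAI secret key."
        )
    return key
```

Calling load_api_key() at the top of a script confirms the key is present before any data is uploaded.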

D. Gather and clean your data

The next step in preparing for fine-tuning a GPT-3 model is to gather and clean your data. This means collecting the data you want to use for fine-tuning and ensuring that it is free from errors or inconsistencies. This is the most important step, because the model's results can only be as good as the examples you train it on. You also need to ensure that the data is in a format that the API can understand for this task. Think of the format as an Excel spreadsheet with two columns named “prompt” and “completion,” representing the input or question to the model and the answer it should produce.
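As a rough illustration of the cleaning step, the sketch below treats each spreadsheet row as a dictionary with “prompt” and “completion” keys. The helper name clean_examples and the sample rows are hypothetical; the cleaning rules (collapse whitespace, drop incomplete rows, drop exact duplicates) are one reasonable baseline rather than a required procedure.

```python
def clean_examples(rows):
    """Normalize whitespace, drop incomplete rows, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        # Collapse runs of whitespace and trim both ends.
        prompt = " ".join(str(row.get("prompt") or "").split())
        completion = " ".join(str(row.get("completion") or "").split())
        if not prompt or not completion:
            continue  # a row needs both columns to be usable
        key = (prompt, completion)
        if key in seen:
            continue  # exact duplicate of an earlier row
        seen.add(key)
        cleaned.append({"prompt": prompt, "completion": completion})
    return cleaned


# Hypothetical sample rows for illustration only.
raw_rows = [
    {"prompt": "Organic sessions dropped 30% on Monday.  ",
     "completion": "Check whether the tracking tag fired after the release."},
    {"prompt": "Organic sessions dropped 30% on Monday.",
     "completion": "Check whether the tracking tag fired after the release."},
    {"prompt": "Paid search CPC doubled overnight.", "completion": ""},
]
examples = clean_examples(raw_rows)
print(len(examples))  # 1: one duplicate and one incomplete row were dropped
```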

E. Validate your data

Finally, validate your data before starting the fine-tuning job. This means checking that every example is properly formatted and that each prompt is labeled with the right completion. The validation step is often a manual process involving multiple team members.
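Part of this check can be automated so that reviewers spend their time on the content rather than on formatting. The sketch below is one possible approach, with a hypothetical helper name: it reports empty fields and prompts that appear with conflicting completions, leaving the decision about each flagged row to a person.

```python
def validate_examples(rows):
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    first_seen = {}  # prompt text -> (row index, completion)
    for i, row in enumerate(rows):
        prompt = str(row.get("prompt") or "").strip()
        completion = str(row.get("completion") or "").strip()
        if not prompt:
            problems.append(f"row {i}: empty prompt")
        if not completion:
            problems.append(f"row {i}: empty completion")
        if not prompt or not completion:
            continue
        if prompt in first_seen:
            j, earlier = first_seen[prompt]
            if earlier != completion:
                # Possibly a labeling mistake; flag it for manual review.
                problems.append(
                    f"row {i}: same prompt as row {j}, different completion"
                )
        else:
            first_seen[prompt] = (i, completion)
    return problems
```

A conflicting completion is not always an error, since varied answers to the same prompt can be intentional, which is why the function reports issues instead of deleting rows.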

Fine-Tuning a GPT-3 model

Ready to dive into the implementation? Let's do it! First up: preparing your data. Here are some steps to help you get started:

A. Data preparation

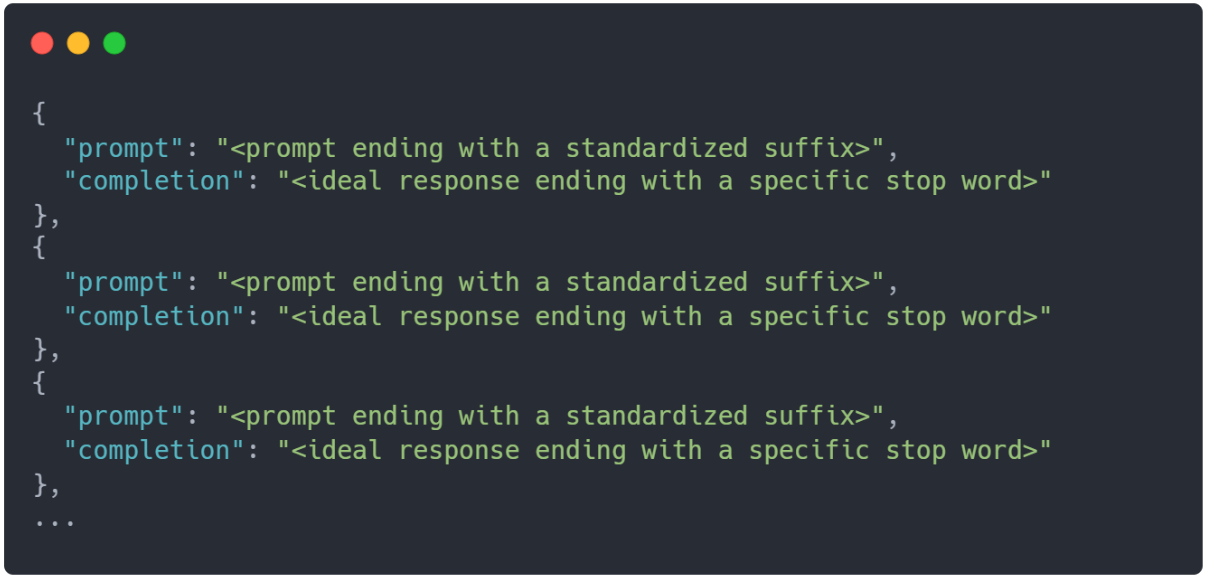

One of the keys to success in fine-tuning GPT-3 is to engineer your prompts effectively. Standardizing the format of your prompts across all examples makes it more likely that the model produces a successful completion. Similarly, standardizing the format of your completions helps ensure that you get text of the right length. Remember to add a stop sequence to every completion before starting the fine-tune job: OpenAI's guidance suggests a fixed marker such as “###” at the end of all completion texts, chosen so that it never appears inside a completion.
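A minimal sketch of this standardization follows. The “###” stop sequence comes from the text above; the prompt separator, the leading space on the completion (a convention from OpenAI's legacy fine-tuning guidance), and the helper name format_example are assumptions made for illustration.

```python
PROMPT_SEPARATOR = "\n\n->\n\n"  # assumed marker for "the prompt ends here"
STOP_SEQUENCE = "###"            # stop sequence mentioned in the text


def format_example(prompt: str, completion: str) -> dict:
    """Apply the same prompt ending and completion ending to one example."""
    completion = completion.strip()
    if STOP_SEQUENCE in completion:
        # The marker must be unambiguous, so it cannot occur inside the text.
        raise ValueError("completion already contains the stop sequence")
    return {
        "prompt": prompt.strip() + PROMPT_SEPARATOR,
        # Leading space: assumed convention for legacy GPT-3 completions.
        "completion": " " + completion + STOP_SEQUENCE,
    }
```

When calling the fine-tuned model later, the same separator would be appended to each prompt and the same stop sequence passed as the stop parameter, so that inference matches the training format.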

B. Augmenting your data

Large language models like the GPT-3 family work best with many examples. To enlarge your dataset for your analytics use case, you can leverage the power of GPT through the API: for example, a short Python script can iterate through your examples and generate new ones similar to those you have gathered. Which side of the example you augment depends on your goal. If you're building an interactive application that allows users to input free text, vary the prompt so the model learns to recognize the many ways a user may phrase the same request. If you're looking for more variety in the responses generated by your model, focus on varying the completion. Either way, the result is a larger dataset that can improve the accuracy and reliability of your analytics insights.
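The prompt-side variant of this idea might be sketched as follows. The function takes the paraphrasing step as a parameter so that the loop can be tried without network access; demo_paraphrase is a hypothetical stand-in that would be replaced by a call to the API, and both names are illustrative.

```python
from typing import Callable, Iterable


def augment_prompts(examples, paraphrase: Callable[[str], Iterable[str]]):
    """Add paraphrased prompts that keep the original completion."""
    augmented = []
    seen = set()
    for ex in examples:
        # Keep the original prompt and add every generated variant.
        candidates = [ex["prompt"], *paraphrase(ex["prompt"])]
        for prompt in candidates:
            prompt = prompt.strip()
            key = (prompt.lower(), ex["completion"])
            if prompt and key not in seen:
                seen.add(key)
                augmented.append(
                    {"prompt": prompt, "completion": ex["completion"]}
                )
    return augmented


def demo_paraphrase(prompt: str):
    # Stand-in for a model call; a real version would request rewrites
    # of the prompt from the API and return them as a list of strings.
    return [f"Why did this happen? {prompt}", prompt]
```

Generated examples deserve the same manual review as hand-written ones, since a paraphrase can subtly change what is being asked.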

C. Converting to the right format

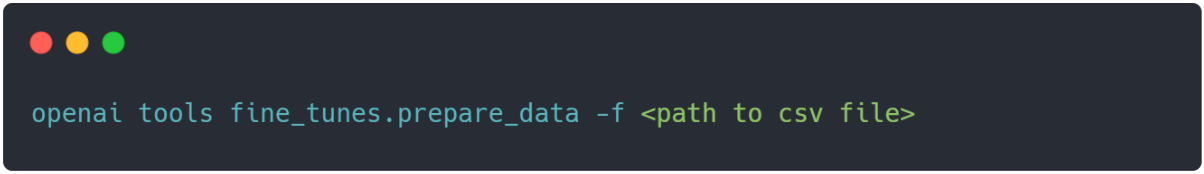

Once you have your data in a CSV or Excel file, you'll need to convert it to the jsonl format that OpenAI uses as input. Fortunately, they provide a tool that makes this process very easy. With the legacy OpenAI CLI, run “openai tools fine_tunes.prepare_data -f your_file.csv” in your terminal, replacing your_file.csv with the path to your own file. The tool analyzes the file and suggests changes; decide for each one whether to apply OpenAI’s recommendations for data preparation.

In the final jsonl file, each line is a single JSON object with a “prompt” key and a “completion” key.
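To make that format concrete, the sketch below serializes a hypothetical example by hand using only the standard library. It assumes the two-column prompt/completion layout described earlier; to_jsonl is an illustrative helper, not OpenAI's conversion tool.

```python
import json


def to_jsonl(examples) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    lines = [
        json.dumps(
            {"prompt": ex["prompt"], "completion": ex["completion"]},
            ensure_ascii=False,
        )
        for ex in examples
    ]
    return "".join(line + "\n" for line in lines)


# Hypothetical example for illustration only.
sample = [
    {"prompt": "Sessions fell 20% on email.",
     "completion": " Check the send schedule.###"},
]
print(to_jsonl(sample), end="")
# {"prompt": "Sessions fell 20% on email.", "completion": " Check the send schedule.###"}
```

Writing the returned string to a file with a .jsonl extension yields a file in the shape the fine-tuning job expects.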

With these steps complete, you're ready to move on to the fine-tuning itself.

D. Starting the fine-tuning job

Once you've prepared your data and chosen a fine-tuning strategy, it's time to implement the fine-tuning process. Here are the steps involved:

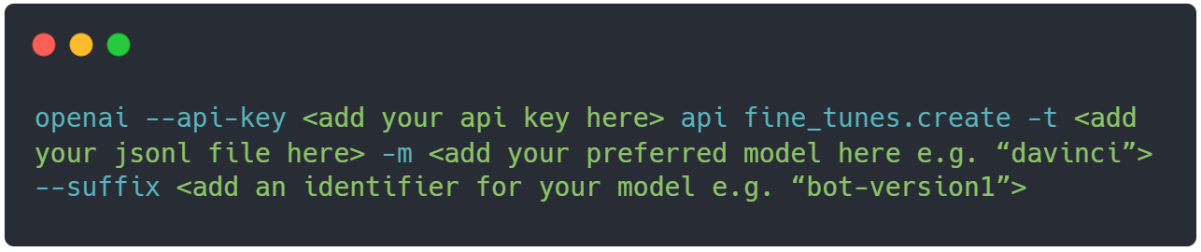

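To start the fine-tuning job with the legacy OpenAI CLI, run “openai api fine_tunes.create -t TRAIN_FILE -m BASE_MODEL”, where TRAIN_FILE is the path to your prepared jsonl file and BASE_MODEL is the name of the base model to fine-tune.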

Then you’ll have to wait for the fine-tuning job to finish.

After the fine-tuning job has been completed, evaluate the model's performance on a validation set similar to the training dataset you used. It's also recommended to test cases that the model is unfamiliar with to identify areas for improvement. Running multiple iterations of testing as you are working on improving the model and keeping statistics for its performance is a good practice. It's important to distinguish statistics for cases similar to the training dataset from those that were not similar.
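One way to keep these statistics separate is to tag each test case with a group and compute a score per group. The sketch below uses exact match after trimming and lowercasing as the scoring rule, which is an assumption; for free-text completions a human rating or a similarity score would take its place. The field names and the function name are illustrative.

```python
from collections import defaultdict


def accuracy_by_group(results):
    """Compute the share of correct predictions for each test group.

    Each result is a dict with 'group', 'expected', and 'predicted' keys.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for r in results:
        totals[r["group"]] += 1
        # Assumed scoring rule: case-insensitive exact match.
        if r["predicted"].strip().lower() == r["expected"].strip().lower():
            correct[r["group"]] += 1
    return {group: correct[group] / totals[group] for group in totals}


# Hypothetical results from one test iteration.
run = [
    {"group": "similar", "expected": "Check tagging", "predicted": "check tagging"},
    {"group": "similar", "expected": "Check budget", "predicted": "Check budget "},
    {"group": "unfamiliar", "expected": "Check consent banner", "predicted": "Check tagging"},
    {"group": "unfamiliar", "expected": "Check send time", "predicted": "Check send time"},
]
print(accuracy_by_group(run))  # {'similar': 1.0, 'unfamiliar': 0.5}
```

Storing the output of each iteration makes it easy to see whether a change to the training data helped the unfamiliar cases or only the familiar ones.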

Best Practices for Fine-Tuning a GPT-3 model

To optimize the results of your fine-tuning efforts, it's important to follow best practices for preparing your data and implementing your fine-tuning strategy. Here are some tips to help you get the most out of your fine-tuning process based on what has been covered so far:

A. Data Preparation Best Practices

Clean and format your data

Data quality is at the heart of every business operation. Before starting the fine-tuning job, it's important to clean and format your data to ensure it's in a format that the API can understand. This involves removing duplicates, correcting spelling errors, and standardizing formatting.

Augment your data

LLMs do work better when provided with more examples. The OpenAI documentation states this explicitly:

“The more training examples you have, the better. We recommend having at least a couple hundred examples. In general, we've found that each doubling of the dataset size leads to a linear increase in model quality.”

Consider augmenting your data to increase the diversity and amount available for fine-tuning.
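Read literally, the quoted rule means quality grows with the number of doublings, that is, with the base-2 logarithm of the dataset size, so each additional example contributes less than the one before. The arithmetic can be sketched as below; the gain per doubling is a made-up number for illustration, not a measured value, and both function names are hypothetical.

```python
import math


def doublings(from_size: int, to_size: int) -> float:
    """Number of dataset doublings needed to go from one size to another."""
    return math.log2(to_size / from_size)


def projected_gain(from_size: int, to_size: int, gain_per_doubling: float) -> float:
    """Quality gain if every doubling adds the same fixed increment."""
    return gain_per_doubling * doublings(from_size, to_size)


# Growing from 200 to 1,600 examples is three doublings (200 -> 400 -> 800 -> 1600).
print(doublings(200, 1600))            # 3.0
# With an assumed 2 points of quality per doubling:
print(projected_gain(200, 1600, 2.0))  # 6.0
```

Under this reading, going from 200 to 400 examples is expected to help about as much as going from 800 to 1,600, which is worth weighing against the cost of producing and training on the extra data.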

B. Fine-Tuning Implementation Best Practices

Start with a small amount of data

When fine-tuning a GPT-3 model, it's best to start with a small amount of data and gradually increase it as needed. A small first run lets you check your prompt format and results before paying to train on the full dataset; don’t forget that this is not a free process.

Experiment with different prompt formats

For example, you can use a whole paragraph as the prompt, or you can supply only specific, detailed information. A whole paragraph gives more general context, while detailed information can state the problem more clearly. Consider the cost of fine-tuning as well, since it can get out of hand when you create many versions. Shorter prompts help reduce the cost because they require fewer tokens.
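A back-of-the-envelope estimate can help compare prompt formats before any money is spent. The sketch below rests on several assumptions: roughly four characters per token for English text, a training cost proportional to tokens multiplied by epochs, and a default of four epochs. The price argument is a placeholder to fill in from the current pricing page, and a real tokenizer will give different counts.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate based on character count (an assumption)."""
    return max(1, round(len(text) / chars_per_token))


def estimate_training_cost(examples, price_per_1k_tokens: float, epochs: int = 4) -> float:
    """Approximate training cost: tokens in the file x epochs x price.

    price_per_1k_tokens is a placeholder; look up the actual rate
    for the base model you plan to use.
    """
    tokens = sum(
        estimate_tokens(ex["prompt"]) + estimate_tokens(ex["completion"])
        for ex in examples
    )
    return tokens * epochs / 1000 * price_per_1k_tokens
```

Running the estimate on a long-paragraph version and a short, specific version of the same dataset shows how much the prompt format alone changes the bill.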

Model selection

Within the GPT-3 family covered by this guide, fine-tuning is only available for the following base models: davinci, curie, babbage, and ada. Keep in mind that the cost of fine-tuning increases significantly with more expensive models like davinci. Test the smaller models too; you might be surprised by the results.

Conclusion

In this article, we've explored the benefits of fine-tuning a GPT-3 model and provided a step-by-step guide on how to do that. We've also covered best practices for preparing your data and implementing your fine-tuning strategy to optimize the results of your efforts.

By fine-tuning a GPT-3 model, you can leverage the power of natural language processing to generate insights and predictions that can help drive data-driven decision-making. Whether you're working in marketing, finance, or any other industry that relies on analytics, LLMs can be a powerful tool in your arsenal.

As the field of artificial intelligence continues to evolve, the possibilities for fine-tuning an LLM for analytics will only continue to grow. By staying up-to-date on the latest developments and following best practices for fine-tuning, you can ensure that you're making the most of this powerful technology.

So, why not give fine-tuning a GPT-3 model a try for your next analytics project? By following the steps outlined in this article and experimenting with different strategies, you may be surprised by the insights and predictions you're able to generate.
